Fortune 1000 organizations spend approximately $5 billion each year to improve the trustworthiness of data. Yet only 42 percent of executives trust their data. According to multiple surveys, executives across industries do not fully trust their organization's data for accurate, timely, business-critical decision-making.

In addition, organizations routinely incur operational losses, regulatory fines, and reputational damage because of data quality errors. Per Gartner, companies lose an average of $14 million per year because of poor data quality.

These are startling facts, given that most Fortune 500 organizations have been investing heavily in people, processes, best practices, and software to improve the quality of their data.

Despite heroic efforts by data quality teams, data quality programs have simply failed to deliver a meaningful return on investment.

This failure can be attributed primarily to the following two factors:

- Iceberg Syndrome: In our experience, data quality programs in most organizations focus on the data risks they can easily see based on past experience, which are only the tip of the iceberg. Completeness, integrity, duplicate, and range checks are the most commonly implemented checks. While these checks help detect data errors, they represent only 20% of the data risk universe.

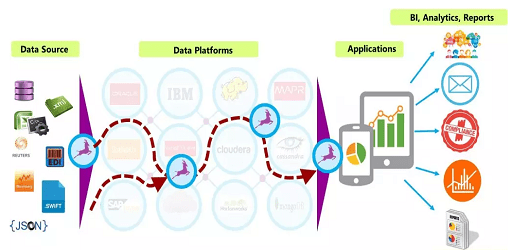

- Data Deluge: The number of data sources, data processes, and applications has increased exponentially in recent times due to the rapid adoption of cloud technology, big data applications, and analytics. Each of these data assets and processes requires adequate data quality controls to prevent data errors in downstream processes.


While data engineering teams can onboard hundreds of data assets in weeks, data quality teams usually take one to two weeks to establish a data quality check for a single data asset. As a result, data quality teams prioritize which data assets get data quality rules, leaving many data assets without any data quality controls at all.
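To make the "tip of the iceberg" checks concrete, here is a minimal Python sketch of hand-written completeness, duplicate, and range rules. The sample rows, field names, and thresholds are all hypothetical and chosen only for illustration; they do not come from any particular tool.

```python
from typing import Any

# Hypothetical sample rows for one data asset; field names are illustrative.
rows: list[dict[str, Any]] = [
    {"order_id": 1, "customer": "acme", "amount": 120.0},
    {"order_id": 2, "customer": None, "amount": 75.5},
    {"order_id": 2, "customer": "globex", "amount": -10.0},
]


def completeness_failures(rows, field):
    """Return rows where a required field is missing."""
    return [r for r in rows if r.get(field) is None]


def duplicate_failures(rows, key):
    """Return rows whose key value already appeared in an earlier row."""
    seen, dupes = set(), []
    for r in rows:
        if r[key] in seen:
            dupes.append(r)
        seen.add(r[key])
    return dupes


def range_failures(rows, field, low, high):
    """Return rows whose numeric field falls outside [low, high]."""
    return [
        r for r in rows
        if r[field] is not None and not (low <= r[field] <= high)
    ]


# Every rule below is hard-coded for this one table (assumed thresholds).
report = {
    "missing_customer": len(completeness_failures(rows, "customer")),
    "duplicate_order_id": len(duplicate_failures(rows, "order_id")),
    "amount_out_of_range": len(range_failures(rows, "amount", 0.0, 10_000.0)),
}
print(report)
```

Each rule is tied to one table's field names and thresholds, which is part of why covering hundreds of data assets by hand is slow.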

DataBuck (https://firsteigen.com/databuck/) from FirstEigen addresses these issues for data lake (https://firsteigen.com/aws/) and data warehouse environments. Using its ML algorithms, it establishes a data fingerprint and an objective data trust score for each data asset (schemas, tables, and columns) in the data lake and data warehouse. Trust in data will no longer be a popularity contest: there is no need for individuals to give subjective opinions on the health of a table or file. All stakeholders can universally understand the objective Data Trust Score.

More specifically, it leverages machine learning to measure the data trust score through the lens of the following standardized data quality dimensions:

- Freshness: Determine whether the data has arrived before the next step of the process.

- Completeness: Determine the completeness of contextually important fields. Contextually important fields should be identified using various mathematical and/or machine-learning techniques.

- Conformity: Determine conformity to the pattern, length, and format of contextually important fields.

- Uniqueness: Determine the uniqueness of the individual records.

- Relationship: Determine conformity to the inter-column relationships within micro-segments of data.

- Drift: Determine the drift of the key categorical and continuous fields from the historical information.

- Anomaly: Determine volume and value anomalies in critical columns.
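A few of these dimensions can be sketched in plain Python to show the general idea of scoring data against its own history rather than against hand-coded limits. This is only an illustrative sketch under stated assumptions: the function names, the sample values, and the z-score threshold of 3.0 are hypothetical, and it is not a description of DataBuck's actual algorithms.

```python
from datetime import datetime
from statistics import mean, stdev


def is_fresh(arrived_at: datetime, deadline: datetime) -> bool:
    """Freshness: the data arrived before the next process step starts."""
    return arrived_at <= deadline


def completeness(values: list) -> float:
    """Completeness: fraction of non-missing values in a field."""
    return sum(v is not None for v in values) / len(values)


def uniqueness(keys: list) -> float:
    """Uniqueness: fraction of records that carry a distinct key."""
    return len(set(keys)) / len(keys)


def z_score(history: list[float], current: float) -> float:
    """Distance of `current` from the historical mean, in standard deviations."""
    return (current - mean(history)) / stdev(history)


def is_anomalous(history: list[float], current: float,
                 threshold: float = 3.0) -> bool:
    """Drift / anomaly: flag a value far outside its own history.

    The threshold is an assumed default, not a recommended setting.
    """
    return abs(z_score(history, current)) > threshold


# Hypothetical daily row counts for one table (volume anomaly check).
daily_row_counts = [1000, 1020, 980, 1010, 990]

print(is_fresh(datetime(2024, 1, 1, 5, 30), datetime(2024, 1, 1, 6, 0)))
print(completeness(["a", None, "b", "c"]))
print(uniqueness([1, 2, 2, 3]))
print(is_anomalous(daily_row_counts, 400))   # sharp drop in volume
print(is_anomalous(daily_row_counts, 1005))  # within normal variation
```

The point of the design is that the expected range is derived from the data's history, so no one has to write a separate rule per table.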

With DataBuck, data owners do not need to write data validation rules or engage data engineers to perform any tasks. DataBuck uses machine learning algorithms to generate an 11-vector data fingerprint to identify records with issues.


Another impressive feature is that the Data Fingerprint approach reduces false positives. For a Fortune 500 industrial company, DataBuck reduced false alerts about data quality issues in Internet of Things (IoT) sensor data by 85%. This helped the company save over $1.2 million.

Data is the most valuable asset for modern organizations. Current approaches to validating data, particularly in data lakes and data warehouses, are full of operational challenges that lead to a trust deficit and to time-consuming, costly methods for fixing data errors. There is an urgent need to adopt a standardized ML-based approach to data validation to prevent data warehouses from becoming data swamps.
